
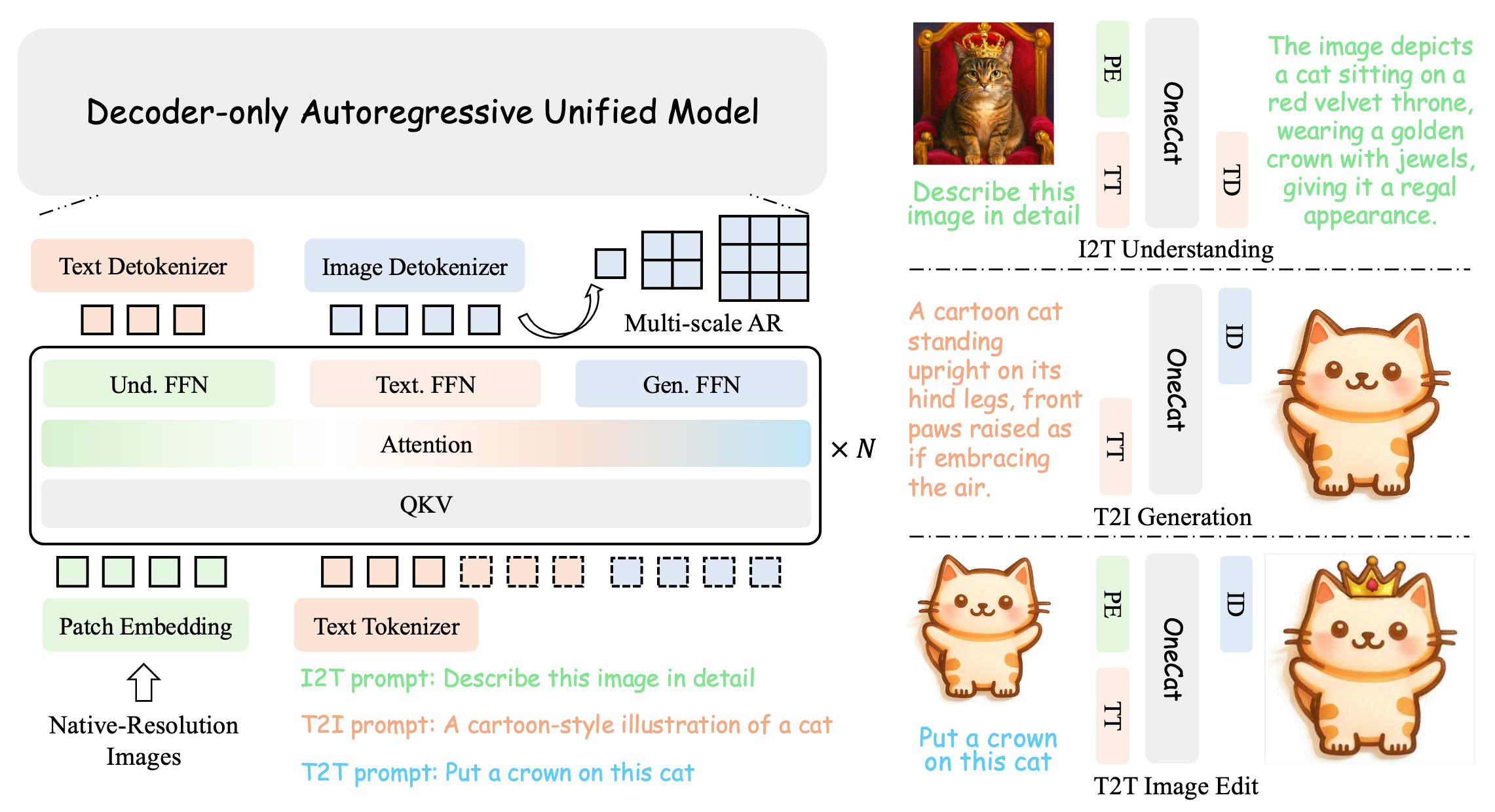

We introduce OneCAT, an open-source unified multimodal model that seamlessly integrates understanding, generation, and editing within a novel, pure decoder-only transformer architecture. The framework eliminates the need for external components such as a Vision Transformer (ViT) or a visual tokenizer during inference, which yields significant efficiency gains and sets a new performance standard for unified multimodal intelligence.

Overview

Pure Decoder-Only Design

Eliminates external vision encoders and VAE tokenizers during inference, using only a lightweight patch embedding layer for raw image processing.
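To make the idea concrete, here is a minimal pure-Python sketch of a lightweight patch embedding. It assumes the layer splits the raw image into non-overlapping square patches and applies one linear projection per patch (equivalent to a convolution whose kernel size and stride both equal the patch size). The names `patchify` and `patch_embed`, the nested-list image layout, and the weight shapes are hypothetical illustrations, not OneCAT's implementation.

```python
from typing import List

# Hypothetical layout: image[y][x] is a list of C channel values.
Image = List[List[List[float]]]


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch x patch tiles and
    flatten each tile (row-major, channels last) into one vector."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("height and width must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            vec: List[float] = []
            for y in range(top, top + patch):
                for x in range(left, left + patch):
                    vec.extend(image[y][x])
            patches.append(vec)
    return patches


def patch_embed(image: Image, patch: int,
                weight: List[List[float]], bias: List[float]) -> List[List[float]]:
    """Map every flattened patch to a d_model-dim token: token = W @ v + b,
    where W has shape d_model x (patch * patch * C)."""
    return [
        [sum(w_i * v_i for w_i, v_i in zip(row, vec)) + b
         for row, b in zip(weight, bias)]
        for vec in patchify(image, patch)
    ]


# Usage: a 4 x 4 single-channel image with values 0..15 and patch size 2.
img = [[[float(y * 4 + x)] for x in range(4)] for y in range(4)]
tokens = patch_embed(img, 2, weight=[[1.0] * 4, [0.0, 0.0, 0.0, 1.0]], bias=[0.0, 1.0])
print(len(tokens), tokens[0])
```

Here the 4 × 4 image becomes 4 tokens, and the first token is `[10.0, 6.0]`. The only learned parameters are `weight` and `bias`, which is what keeps such a layer lightweight compared with a full ViT encoder.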

Mixture-of-Experts (MoE)

Three specialized FFN experts: Text FFN for language comprehension, Understanding FFN for visual tokens, and Generation FFN for image synthesis.
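A minimal sketch of modality-specific expert routing follows. It assumes each token is sent to exactly one expert according to its type, rather than by a learned top-k gate. The type labels `"text"`, `"und"`, and `"gen"`, the `make_ffn` stand-in, and `modality_moe` are hypothetical names used only for illustration.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]
FFN = Callable[[Vector], Vector]


def make_ffn(scale: float) -> FFN:
    """Hypothetical stand-in for an expert FFN: element-wise scaling + ReLU."""
    return lambda h: [max(0.0, scale * x) for x in h]


def modality_moe(hidden: Sequence[Vector], token_types: Sequence[str],
                 experts: Dict[str, FFN]) -> List[Vector]:
    """Send each token to the single expert that matches its type.

    Routing is deterministic (no learned gate): 'text' tokens use the text
    expert, 'und' visual tokens the understanding expert, and 'gen' visual
    tokens the generation expert."""
    if len(hidden) != len(token_types):
        raise ValueError("need one token type per hidden state")
    return [experts[t](h) for h, t in zip(hidden, token_types)]


experts = {"text": make_ffn(1.0), "und": make_ffn(2.0), "gen": make_ffn(-1.0)}
out = modality_moe([[1.0, -1.0], [1.0, 2.0], [3.0, 1.0]],
                   ["text", "und", "gen"], experts)
print(out)  # [[1.0, 0.0], [2.0, 4.0], [0.0, 0.0]]
```

Because the route is fixed by token type, each token activates a single FFN, so per-token compute matches a dense layer with one FFN even though the total parameter count grows with the number of experts.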

Multi-Scale Autoregressive

Pioneers a multi-scale visual autoregressive mechanism within the LLM based on Next-Scale Prediction, generating images coarse-to-fine and drastically reducing the number of generation steps compared with diffusion models.
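The coarse-to-fine loop can be sketched as follows, in the style of visual autoregressive next-scale prediction. The scale schedule `[1, 2, 4, 8, 16]`, the `predict` callable, and the toy predictor are assumptions for illustration; a real model would condition on learned token embeddings, and the comparison with diffusion sampling is not modeled here.

```python
from typing import Callable, List, Tuple

TokenMap = List[List[int]]  # a square grid of discrete image-token ids


def upsample_nearest(m: TokenMap, size: int) -> TokenMap:
    """Nearest-neighbour upsampling of an n x n token map to size x size."""
    n = len(m)
    return [[m[y * n // size][x * n // size] for x in range(size)]
            for y in range(size)]


def generate_next_scale(
    scales: List[int],
    predict: Callable[[List[TokenMap], int], TokenMap],
) -> Tuple[List[TokenMap], int]:
    """Coarse-to-fine generation with one model call per scale.

    `predict(history, size)` stands in for a forward pass that emits all
    size x size tokens of the next scale in parallel, conditioned on every
    coarser map generated so far."""
    history: List[TokenMap] = []
    steps = 0
    for size in scales:
        history.append(predict(history, size))
        steps += 1
    return history, steps


def toy_predict(history: List[TokenMap], size: int) -> TokenMap:
    """Toy predictor: upsample the previous scale and add 1 to every token."""
    if not history:
        return [[0] * size for _ in range(size)]
    return [[v + 1 for v in row] for row in upsample_nearest(history[-1], size)]


maps, steps = generate_next_scale([1, 2, 4, 8, 16], toy_predict)
print(steps, len(maps[-1]))  # 5 forward passes for a 16 x 16 map
```

With five scales the loop makes 5 forward passes to produce a 16 × 16 token map, whereas decoding the same map one token at a time in raster order would take 256 passes.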

Example Showcase

Chat & Visual Question Answering

Text-to-Image Generation

Instruction-based Image Editing

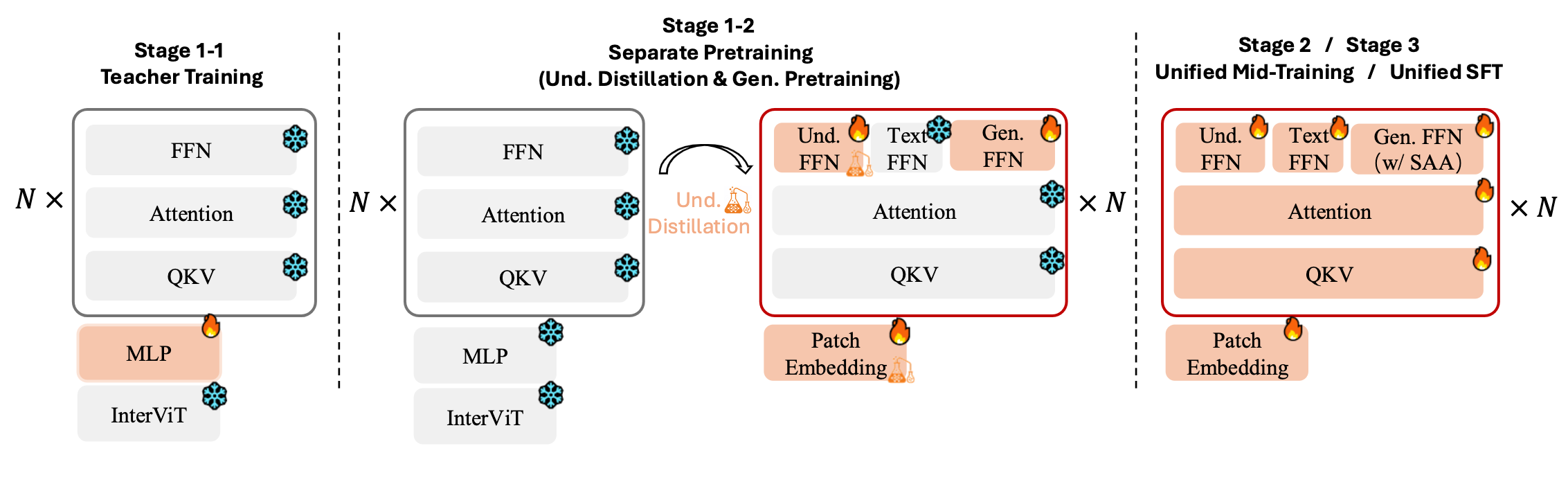

Training Pipeline

Stage 1: Separate Pretraining

Understanding Distillation: 436M image-text pairs, trained with teacher-student distillation from an InternViT teacher model
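The exact distillation objective is not specified here. As an illustration only, one common form of teacher-student distillation aligns the student's hidden states for visual tokens with the teacher's features using mean squared error. The sketch below assumes that form, equal feature dimensions, and a frozen teacher; `feature_distill_loss` is a hypothetical name, and OneCAT's actual loss may differ (for example, a logit-level KL term).

```python
from typing import Sequence


def feature_distill_loss(student: Sequence[Sequence[float]],
                         teacher: Sequence[Sequence[float]]) -> float:
    """Mean squared error between student and teacher features for the same
    visual tokens, averaged over tokens and feature dimensions. The teacher is
    treated as a fixed target (no gradient would flow into it in training)."""
    if len(student) != len(teacher):
        raise ValueError("student and teacher must cover the same tokens")
    total, count = 0.0, 0
    for s_vec, t_vec in zip(student, teacher):
        if len(s_vec) != len(t_vec):
            raise ValueError("feature dimensions must match")
        for s, t in zip(s_vec, t_vec):
            total += (s - t) ** 2
            count += 1
    return total / count


print(feature_distill_loss([[0.0, 0.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 3.0]]))  # 1.5
```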

Generation Pretraining: 51M text-to-image samples with Next-Scale Prediction loss
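A Next-Scale Prediction loss can be sketched as ordinary cross-entropy applied to every token of every scale. This sketch assumes a uniform average over all tokens across scales and takes already-normalized probability distributions as input; the per-scale weighting used in OneCAT may differ, and `next_scale_ce` is a hypothetical name.

```python
import math
from typing import Sequence


def next_scale_ce(probs_per_scale: Sequence[Sequence[Sequence[float]]],
                  targets_per_scale: Sequence[Sequence[int]]) -> float:
    """Mean negative log-likelihood over every token of every scale.

    probs_per_scale[k][i] is the predicted distribution over the codebook for
    token i of scale k (flattened row-major); targets_per_scale[k][i] is the
    ground-truth token id at that position."""
    if len(probs_per_scale) != len(targets_per_scale):
        raise ValueError("one target map per scale is required")
    total, count = 0.0, 0
    for probs, targets in zip(probs_per_scale, targets_per_scale):
        if len(probs) != len(targets):
            raise ValueError("each scale needs one target per predicted token")
        for dist, target in zip(probs, targets):
            total -= math.log(dist[target])
            count += 1
    return total / count


# Scale 1 (1 token, uncertain) and scale 2 (4 tokens, confident and correct).
loss = next_scale_ce(
    [[[0.5, 0.5]], [[1.0, 0.0]] * 4],
    [[0], [0, 0, 0, 0]],
)
print(loss)  # equals log(2) / 5
```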

Stage 2: Unified Mid-Training

Unified training across all tasks, with a Scale-Aware Adapter integrated for generation. A native-resolution strategy with dynamic aspect ratios is used for both understanding and generation.
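A native-resolution strategy with dynamic aspect ratios can be illustrated by computing the patch grid an image would occupy without forcing it to a fixed square size. The patch size of 16, the 4096-token budget, and the `native_grid` helper are hypothetical choices for this sketch, not values taken from OneCAT.

```python
import math
from typing import Tuple


def native_grid(width: int, height: int, patch: int = 16,
                max_tokens: int = 4096) -> Tuple[int, int]:
    """Return (cols, rows) of the patch grid for an image kept at (close to)
    its native resolution and aspect ratio. Images whose grid would exceed
    `max_tokens` are shrunk uniformly so the aspect ratio is roughly kept."""
    cols = max(1, round(width / patch))
    rows = max(1, round(height / patch))
    if cols * rows > max_tokens:
        scale = math.sqrt(max_tokens / (cols * rows))
        cols = max(1, math.floor(cols * scale))
        rows = max(1, math.floor(rows * scale))
    return cols, rows


print(native_grid(1024, 768))   # (64, 48): within budget, native size kept
print(native_grid(2048, 1024))  # (90, 45): shrunk to fit 4096 tokens
```

The design choice is that the token count tracks the image's own size and shape, so a wide image yields a wide grid instead of being padded or squashed to a square.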

Stage 3: Supervised Fine-Tuning

High-quality instruction-following data with expanded generation resolution support

Multimodal Understanding

| Model | A-LLM | Vis. | MME-P ↑ | MME-S ↑ | MMBench ↑ | MMMU ↑ | MM-Vet ↑ | MathVista ↑ | SEED ↑ |
|---|---|---|---|---|---|---|---|---|---|
| Encoder-based Understanding-Only Models | | | | | | | | | |
| InternVL2 | 1.8B | 0.3B | 1440 | 1877 | 73.2 | 34.3 | 44.6 | 46.4 | 71.6 |
| InternVL2.5 | 1.8B | 0.3B | — | 2138 | 74.7 | 43.6 | 60.8 | 51.3 | — |
| Qwen2-VL | 1.5B | 0.6B | — | 1872 | 74.9 | 41.1 | 49.5 | 43.0 | — |
| Qwen2.5-VL | 3B | 0.6B | — | 2157 | 79.1 | 53.1 | 61.8 | 62.3 | — |
| Encoder-free Understanding-Only Models | | | | | | | | | |
| Mono-InternVL | 1.8B | / | — | 1875 | 65.5 | 33.7 | 40.1 | 45.7 | 67.4 |
| EvE | 7B | / | — | 1628 | 52.3 | 32.6 | 25.7 | — | 64.6 |
| EvEv2.0 | 7B | / | — | 1709 | 66.3 | 39.3 | 45.0 | — | 71.4 |
| HoVLE | 2.6B | / | — | 1864 | 71.9 | 33.7 | 44.3 | 46.2 | 70.7 |
| VoRA | 7B | / | 1363 | 1674 | 64.2 | 32.2 | 33.7 | - | 67.5 |
| SAIL | 7B | / | - | 1719 | 70.1 | - | 46.3 | 57.0 | 72.9 |
| Unified Models | | | | | | | | | |
| Chameleon | 7B | - | — | — | 35.7 | 28.4 | 8.3 | — | 30.6 |
| Emu3 | 8B | 0.3B | — | — | 58.5 | 31.6 | 37.2 | — | 68.2 |
| Harmon | 1.5B | 0.9B | 1155 | 1476 | 65.5 | 38.9 | — | — | 67.1 |
| Show-o2 (1.5B) | 1.5B | 0.5B | 1450 | — | 67.4 | 37.1 | — | — | 65.6 |
| Janus-Pro (1.5B) | 1.5B | 0.3B | 1444 | — | 75.5 | 36.3 | 39.8 | — | — |
| ILLUME+ | 3B | 0.6B | 1414 | — | 80.8 | 44.3 | 40.3 | — | 73.3 |
| VILA-U | 7B | 0.4B | 1401 | — | — | — | 33.5 | — | 59.0 |
| Janus-Pro (7B) | 7B | 0.3B | 1567 | — | 79.2 | 41.0 | 50.0 | — | — |
| Tar | 7B | 0.4B | 1571 | 1926 | 74.4 | 39.0 | — | — | — |
| Show-o2 (7B) | 7B | 0.5B | 1620 | — | 79.3 | 48.9 | — | — | 69.8 |
| OneCAT-3B | 3B | / | 1630 | 2051 | 78.8 | 41.9 | 52.2 | 61.7 | 72.5 |

Higher is better. Best and second-best are highlighted (across unified models).

Text-to-Image Generation

| Model | Params | GenEval Overall | DPG-Bench Overall |
|---|---|---|---|
| Emu3-8B | 8B | 0.66 | 81.60 |
| Janus-Pro-7B | 7B | 0.80 | 84.19 |
| Mogao-7B | 7B | 0.89 | 84.33 |
| BAGEL-7B | 7B | 0.82 | — |
| BAGEL-7B† | 7B | 0.88 | — |
| Show-o2-7B | 7B | 0.76 | 86.14 |
| Tar-7B | 7B | 0.84 | 84.19 |
| BLIP3-o-8B | 8B | 0.84 | 81.60 |
| OneCAT-3B | 3B | 0.90 | 84.53 |

GenEval and DPG-Bench results. Best and second-best are highlighted. † denotes results obtained with prompt rewriting.

Image Editing

| Model | Add | Adjust | Extract | Replace | Remove | Background | Style | Hybrid | Action | Overall |
|---|---|---|---|---|---|---|---|---|---|---|
| MagicBrush | 2.84 | 1.58 | 1.51 | 1.97 | 1.58 | 1.75 | 2.38 | 1.62 | 1.22 | 1.90 |
| Instruct-Pix2Pix | 2.45 | 1.83 | 1.44 | 2.01 | 1.50 | 1.44 | 3.55 | 1.20 | 1.46 | 1.88 |
| AnyEdit | 3.18 | 2.95 | 1.88 | 2.47 | 2.23 | 2.24 | 2.85 | 1.56 | 2.65 | 2.45 |
| UltraEdit | 3.44 | 2.81 | 2.13 | 2.96 | 1.45 | 2.83 | 3.76 | 1.91 | 2.98 | 2.70 |
| Step1X-Edit | 3.88 | 3.14 | 1.76 | 3.40 | 2.41 | 3.16 | 4.63 | 2.64 | 2.52 | 3.06 |
| ICEdit | 3.58 | 3.39 | 1.73 | 3.15 | 2.93 | 3.08 | 3.84 | 2.04 | 3.68 | 3.05 |
| OmniGen | 3.47 | 3.04 | 1.71 | 2.94 | 2.43 | 3.21 | 4.19 | 2.24 | 3.38 | 2.96 |
| OmniGen2 | 3.57 | 3.06 | 1.77 | 3.74 | 3.20 | 3.57 | 4.81 | 2.52 | 4.68 | 3.44 |
| BAGEL-7B | 3.56 | 3.31 | 1.70 | 3.30 | 2.62 | 3.24 | 4.49 | 2.38 | 4.17 | 3.20 |
| UniWorld-V1-20B | 3.82 | 3.64 | 2.27 | 3.47 | 3.24 | 2.99 | 4.21 | 2.96 | 2.74 | 3.26 |
| OneCAT-3B | 3.65 | 3.70 | 2.42 | 3.92 | 3.00 | 3.79 | 4.61 | 2.23 | 3.53 | 3.43 |

ImgEdit-Bench results. Best and second-best are highlighted.

Efficiency Analysis

| Model | Resolution of Input Image | #Text Tokens | #Visual Tokens | TTFT (s) | TTFT Reduction |
|---|---|---|---|---|---|
| Qwen2.5-VL-3B | 768 × 768 | 24 | 731 | 0.135 | |
| OneCAT-3B | 768 × 768 | 24 | 731 + 256 | 0.067 | 50.4% |
| Qwen2.5-VL-3B | 1024 × 1024 | 24 | 1395 | 0.216 | |
| OneCAT-3B | 1024 × 1024 | 24 | 1395 + 256 | 0.092 | 57.4% |
| Qwen2.5-VL-3B | 1792 × 1792 | 24 | 4098 | 0.583 | |
| OneCAT-3B | 1792 × 1792 | 24 | 4098 + 256 | 0.225 | 61.4% |

Efficiency comparison of OneCAT-3B and Qwen2.5-VL-3B (Prefilling). TTFT = Time To First Token.
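The Reduction figures are consistent with the relative drop in TTFT from Qwen2.5-VL-3B to OneCAT-3B at each resolution. The short check below recomputes them from the TTFT values in the table; `ttft_reduction` is a hypothetical helper name.

```python
def ttft_reduction(baseline_s: float, ours_s: float) -> float:
    """Relative time-to-first-token reduction, in percent."""
    return (baseline_s - ours_s) / baseline_s * 100


# (Qwen2.5-VL-3B TTFT, OneCAT-3B TTFT) in seconds, copied from the table.
ttft = {768: (0.135, 0.067), 1024: (0.216, 0.092), 1792: (0.583, 0.225)}
for res, (qwen, onecat) in ttft.items():
    print(f"{res} x {res}: {ttft_reduction(qwen, onecat):.1f}%")
```

This reproduces 50.4%, 57.4%, and 61.4%, showing that the relative saving grows with input resolution.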

| Model | Resolution of Generated Image | T2I Infer. Time (s) | Edit Infer. Time (s) |
|---|---|---|---|
| BAGEL-7B | 512 × 512 | 8.762 | 13.447 |
| OneCAT-3B | 512 × 512 | 1.40 | 2.03 |
| BAGEL-7B | 1024 × 1024 | 26.293 | 46.444 |
| OneCAT-3B | 1024 × 1024 | 2.85 | 4.61 |

Generation efficiency comparison of OneCAT-3B and BAGEL-7B. T2I = Text-to-Image.
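Expressed as speedup ratios, the generation timings above can be summarized with a simple division of BAGEL-7B's time by OneCAT-3B's time. The `speedup` helper is a hypothetical name, and the ratios only hold for whatever hardware and settings produced the table.

```python
def speedup(baseline_s: float, ours_s: float) -> float:
    """How many times faster the second model is than the baseline."""
    return baseline_s / ours_s


# (BAGEL-7B seconds, OneCAT-3B seconds) from the table, keyed by (resolution, task).
timings = {
    (512, "T2I"): (8.762, 1.40),
    (512, "Edit"): (13.447, 2.03),
    (1024, "T2I"): (26.293, 2.85),
    (1024, "Edit"): (46.444, 4.61),
}
for (res, task), (bagel, onecat) in timings.items():
    print(f"{res} x {res} {task}: {speedup(bagel, onecat):.2f}x")
```

Measured this way, OneCAT-3B is roughly 6.3× to 10.1× faster than BAGEL-7B, with the gap widening at 1024 × 1024.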